If you’re a Unix user, odds are you’re more than familiar with SSH for remote access. However, unless the system you’re connecting to is located in a familiar network and is well known, either through its IP address or a DNS record, things become a bit more complicated…

How can we have access to an endpoint when we have no knowledge where that endpoint is connected or how it’s connected (behind firewall, NAT, VPN, etc)? Imagine mobile devices, laptops or even unattended systems where we might need to provide some sort of remote support. Not only that, but also how can we secure this communication process, given the fact that we might not be able to ensure the physical security of our endpoint, which means that any stored secret is potentially available to an attacker? And how can we do it with little or no cost?

Probably, the first simple approach would be to restore that “familiar network” environment by adding to our remote system some sort of dynamic DNS registration process, as well as having our remote device’s SSH port mapped on the internet using UPnP/IGD or some other equivalent process. If we were connecting our device at home, at least… otherwise, the rest of the world is not that simple. Not only can we not ensure that our device is able to control its router/firewall in any way, but the dependency on a dynamic DNS service could also imply licensing costs, as well as account data stored in the device that could be easily exploited by an attacker who gets physical access to the device. Additionally, you’re exposing the device access port on the internet, allowing attacks based on any exploit that might exist for the SSH server or any other remote access software you might be using (it is assumed for this article that you’re no longer using passwords for remote access and all authentication is based on either keys or certificates).

Another approach is using persistent VPN connections. A persistent VPN connection could also provide that familiar IP address for the device. It could also provide an encryption layer, even though it would not be needed, since we would already be connecting to the endpoint device using an encrypted connection, provided by SSH. Naturally, in the case of a Windows system, with an RDP or VNC connection, other security constraints may apply. Nevertheless, a persistent VPN connection will give us the necessary means and security to ensure access to our remote endpoint (assuming it has an internet connection).

But what are the drawbacks of this approach? The first issue comes with possible layer 3 (routing) constraints that may exist on the network. Since we have no control over the networking rules where the device is connected, we cannot ensure that the remote router would allow, for example, a PPTP or L2TP connection, which requires an encapsulation other than plain TCP. This means that we need a solution that uses TCP encapsulation and is mapped on port 443, where an eventual transparent proxy or firewall would allow our endpoint device to connect. Using OpenVPN as the software for the VPN connection would easily solve the eventual routing constraints an endpoint might encounter connecting to the internet. Another issue is the fact that VPN software presents the system with a complete IP stack, which would require additional firewall programming on the back-end to prevent the client system from having connectivity to all the other endpoints on the remote access network, or even to prevent the credentials stored in the endpoint device from having any other use than just making that connection to your VPN server. Depending on available resources, firewall software or routers, this could be achieved and, despite its complexity, it would solve the problem of having remote access to the device and proper security at the same time.
Your main vulnerability would be the bureaucracy required to configure multiple systems (VPN server, firewall, routers), multiple tools or multiple credentials.

Another familiar approach would be using a tool designed for remote access like Teamviewer (LogMeIn or any other like them). In the case of Teamviewer, a white-paper describing the security architecture was published and it shows that it is a very secure system, assuming your level of paranoia allows you to believe what’s written in their security paper. Actually, the major drawbacks of Teamviewer in this context are:

- the product seems to be too focused on the desktop, having to resort to a custom API for scripting purposes or integration with other tools.

- the security paper clearly states that if unattended access is required, a local password can be set to allow access by a remote operator, but it doesn’t ensure that this password cannot be extracted from the device. The paper also states that access policies are digitally signed, so we could speculate that even if this password is compromised, a remote attacker may still not be able to access the device. This eventual vulnerability is not a problem if the device is a server, stored in a datacenter, where we would have some form of physical security. But we can only speculate from the contents of the white-paper (Teamviewer was never consulted regarding this).

- last but not least, the licensing cost.

When relying on user based access authorisation, ID plus random PIN, which seems to be the desired mode of operation, Teamviewer can be a very secure tool based on what’s described in their security paper.

Naturally, the only approach that fully addresses the physical security issues discussed here is the use of secure hardware like that used, for example, by the Payment Card Industry. A device that is PCI certified for card payments has several tampering sensors that will erase all information (especially authentication keys) when triggered. Even if the devices are booted into some maintenance mode in an attempt to load malicious code, the devices will only accept new code if it’s digitally signed. In the world of PCs and other consumer devices, this level of security is not mainstream yet. We’re beginning to have some security hardware in products targeted at professional use, like the Trusted Platform Module or, in the consumer world, what Apple calls the Secure Enclave in their iOS devices. These devices have an embedded secure chip that provides secure storage and an encryption processor, allowing the implementation of certification protocols for the device. However, in the case of PCs with a TPM, you have to ensure two things:

- the storage in the device must have its content encrypted by the TPM so that we can ensure the integrity of the software (for example, with Bitlocker in Windows)

- the software booted by the device must not allow privilege escalation that would allow the user to override the TPM (Windows?!)

Anyway, things are getting better in the world of PCs… Apple’s solution for the Mac, with FileVault 2 is also good. There is a great paper available on the web with the reverse engineering of Apple’s FileVault. It’s sad though, that Apple still doesn’t support a security device on the Mac, like you can have today on an iPhone or an iPad.

Why SSH?

Short-single-word-answer: ubiquity. SSH is probably the tool that every Unix system administrator will use every day, even with the advent of tools like puppet, chef or any other configuration management tool. It’s well known, well understood, well tested. It’s a reliable tool and it’s free (OpenSSH, at least). It is bundled with every major Unix product on the market: RedHat Linux, SUSE Linux, Ubuntu, Solaris, HP-UX, Apple OSX, etc… and it’s even easily available for Windows. In the case of OpenSSH, it’s open source, so you can download the source and inspect the code before using it. The fact is that SSH not only gives you the ability to secure a connection but can also act as a communications tool, shaping how that communication is done. It can act as a SOCKS proxy or as a port forwarder (in any direction), or it can limit the type of connection (remote shell, file transfer, X window system). But how can we leverage all these features to solve our problem?

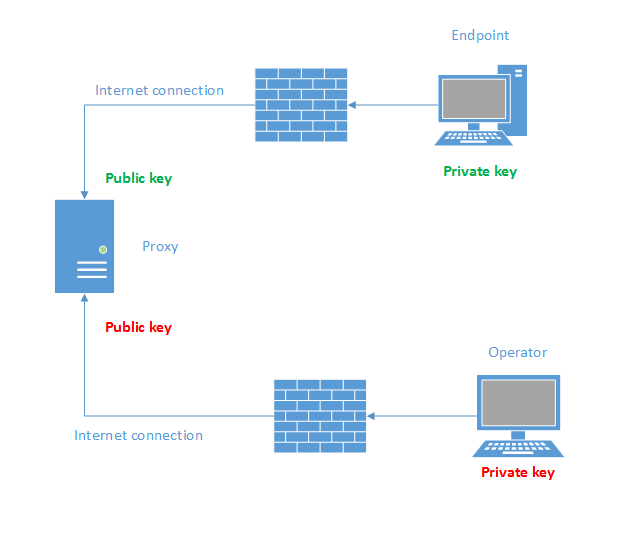

Since we cannot address our endpoint directly, some sort of connection has to start from our endpoint to a known host. Let’s call that known host the proxy. This can be easily achieved with a tool like autossh. autossh will make your link persistent and will provide encryption and key-based authentication to the proxy. Of course, the private key stored on the endpoint is vulnerable, so the proxy will need to be hardened in such a way that this key doesn’t become a major vulnerability. The autossh connection will also forward a port from the proxy to the endpoint SSH server. This way, you’ll be able to transform an IP address that you do not know, or cannot reach because it’s behind a firewall, into a port mapped to the loopback interface of the proxy. Also, since you’ll be exporting the remote access port to the proxy with an outbound connection, you can disable all inbound traffic on the firewall configuration of the endpoint.

Assuming our endpoint is running Linux, you’ll need to do the following:

- Install and configure the local SSH server

- Set up an SSH config file with the necessary configuration to reach the proxy

- Disable inbound traffic (iptables, ufw or anything else)

- Add the autossh command to your /etc/rc.local or something equivalent:

autossh -M 0 -f -R 10000:127.0.0.1:22 -N proxy

autossh will ask the proxy to open port 10000 on its loopback interface. The -M 0 parameter disables autossh’s own monitoring port, so no additional ports are opened, and the -N parameter means no remote session is set up. The link simply forwards port 10000 on the loopback interface of the proxy to port 22 on the loopback interface of the endpoint.
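The `proxy` name used in the command is resolved through the SSH config file mentioned in the list above. A minimal sketch of such a file follows, written to a scratch directory so it can be reviewed before being copied into place. The host name `proxy.example.com`, the account `endpoint01` and the key path are placeholders, not values from this setup; port 443 matches the proxy configuration described later. The keepalive and `ExitOnForwardFailure` options are an addition, on the assumption that with `-M 0` autossh only restarts ssh when ssh itself exits.

```shell
#!/bin/sh
# Sketch: write an ssh client config for the endpoint-to-proxy link.
# CONF_DIR, proxy.example.com, endpoint01 and endpoint.key are placeholders.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/config" <<'EOF'
# Endpoint-to-proxy link used by autossh
Host proxy
    HostName proxy.example.com
    Port 443
    User endpoint01
    IdentityFile ~/.ssh/endpoint.key
    IdentitiesOnly yes
# Treat the link as dead after 3 missed keepalives sent 30 seconds apart
    ServerAliveInterval 30
    ServerAliveCountMax 3
# Exit if the remote forward cannot be set up, so autossh can retry
    ExitOnForwardFailure yes
EOF

# Keep the file private to the account that runs autossh
chmod 600 "$CONF_DIR/config"
echo "wrote $CONF_DIR/config"
```

Once reviewed, the file can be installed as the `~/.ssh/config` of the account that runs autossh on the endpoint.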

You end up with a system like the one depicted below (the walls represent the fact that there will not be any open ports to the outside world on the endpoints):

The operator workstation will have a slightly different setup. Since you will not need a local SSH server, the steps to set up the SSH link to the proxy are:

- Set up the SSH config file with the necessary configuration to reach the proxy

- Disable inbound traffic (iptables, ufw or anything else)

- Add the autossh command to your /etc/rc.local or something equivalent:

autossh -M 0 -f -D 8080 -N proxy

This time autossh will set up a local SOCKS proxy on port 8080 that will allow you to perform connections through the proxy. As the remote operator, you will now be able to connect to any of the SSH servers, on each endpoint, that are now available as ports on the loopback interface of the proxy.

So for the example presented above, you could start your typical shell session using the following command:
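Both checklists above include a “disable inbound traffic” step. One possible way to express it, assuming iptables is the firewall in use, is a default-deny ruleset in iptables-restore format. The sketch below only writes the rules to a file for review; the file location is a placeholder and nothing is applied.

```shell
#!/bin/sh
# Sketch: default-deny inbound policy in iptables-restore format.
# RULES_FILE is a placeholder path; this script does not apply the rules.
RULES_FILE="${RULES_FILE:-$(mktemp)}"

cat > "$RULES_FILE" <<'EOF'
*filter
:INPUT DROP [0:0]
:FORWARD DROP [0:0]
:OUTPUT ACCEPT [0:0]
# Loopback stays open: the forwarded connection reaches sshd on 127.0.0.1:22
-A INPUT -i lo -j ACCEPT
# Replies to connections this host opened itself (the autossh link, DNS, ...)
-A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
COMMIT
EOF

echo "rules written to $RULES_FILE"
# To apply, as root and after review: iptables-restore < "$RULES_FILE"
```

The loopback rule matters on the endpoint: the reverse tunnel delivers the operator’s connection to the local SSH server over the loopback interface, so dropping that traffic would break the link.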

ssh -F config endpoint

The config file would have the following content:

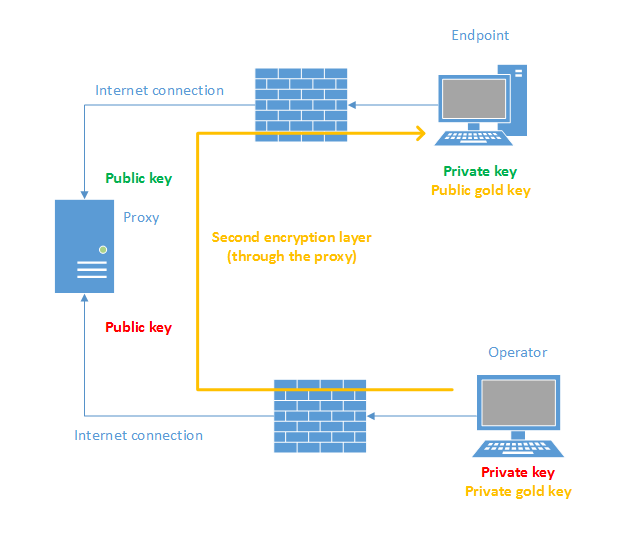

Host endpoint

Hostname 127.0.0.1

Port 10000

User operator

IdentityFile "gold.key"

ProxyCommand /usr/bin/nc -x 127.0.0.1:8080 %h %p

Inspecting the config file you can see there’s a new key. We shall call it the gold key, which is in fact the key that allows you to have access to the endpoint and that restores the remote connection process that you are familiar with. This way, the private key on the endpoint will be used only to allow us to enforce key authentication on the proxy. Also, this private key will be unique to each endpoint making it easy to cut that endpoint from the system if it becomes compromised (stolen, attacked, etc). The gold key, however, can be the same for every endpoint since they will store only the public key. The private gold key is guarded by the remote operator. The following picture shows the final system:

Now that we have secured our endpoints from the network (we shielded them by having no open ports and added two layers of encryption), what we have effectively achieved is to turn our proxy system into one big fat target for an attacker. The fact that the proxy connects every endpoint makes it the ideal target. Once you are able to get in, in theory, you can have access to all the devices… assuming you can find something horribly wrong with SSH. As you can see from the picture, the proxy holds no private keys, so even if you have full control of the proxy, you will not be able to access any endpoint or even eavesdrop on the remote connections, since those are protected by the second encryption layer. What an attacker can always do is deny the service, but for that he or she doesn’t even need to be able to hack the proxy. On the other hand, since the endpoints hold valid credentials as well as the location of the proxy on the internet, it becomes very easy to build an attack if an endpoint is physically compromised. In that case, the attacker gets a set of valid credentials to access the proxy, which brings us to the configuration of the proxy…

The proxy can be a physical system, a virtual machine or a container. If you’re brave, it can even be just another sshd process on any system to save resources. This ssh service will need a specific configuration to prevent the credentials stored on the endpoints from being used for anything other than creating that endpoint-to-proxy connection. As we did before, we will assume our proxy is a Linux system. There are two aspects of the proxy configuration to consider: user accounts for endpoint authentication and the sshd_config file. Regarding the authentication process, the suggested approach is to create a dedicated group for the user accounts, set the shell of those accounts to /bin/false and leave them without passwords. For the new sshd configuration, the following settings should be added to your typical sshd_config file:

Port 443

Protocol 2

AuthorizedKeysFile /home/keys/%u/authorized_keys

AllowGroups endpoints

Subsystem sftp internal-sftp

PasswordAuthentication no

MaxAuthTries 1

LoginGraceTime 30

PermitRootLogin no

PidFile /var/run/sshd-endpoints.pid

Match Group endpoints

ForceCommand internal-sftp

AllowTcpForwarding yes

ChrootDirectory %h

X11Forwarding no

GatewayPorts no

PermitTunnel no

This configuration by itself is not enough. Additional changes have to be made to each authorized_keys file. As you can see from the suggested configuration, keys are stored in a separate file tree, not accessible to the endpoints. This is needed to prevent endpoints from editing their authorized_keys file, as they could if the file were located in the home directory. Even though the home directory will be owned by root, as required by ChrootDirectory in the config file, the .ssh directory and the authorized_keys file inside it would not be. The reason this matters is that in the suggested configuration, the endpoint will be able to send data to or receive data from the proxy through SFTP. In an SFTP session, even if the authorized_keys file is set to read-only, this setting could easily be changed using chmod and the file then edited. Each authorized_keys file has to be changed to add the permitopen parameter. We will use the permitopen parameter to prevent an attacker from forwarding to a different port or even connecting to other systems outside the proxy. Note that permitopen restricts the destinations of forwards opened through the proxy (ssh -L and -D); the port on which a remote forward (ssh -R) listens can only be pinned with the permitlisten parameter, which was added in OpenSSH 7.8. For the current example, the authorized_keys would be:

permitopen="127.0.0.1:10000" ssh-........
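Since every endpoint needs its own authorized_keys entry, the line above can be generated rather than typed. The sketch below assumes a hypothetical helper named `restrict_key`; the `no-pty`, `no-agent-forwarding` and `no-X11-forwarding` options are standard authorized_keys options added here as extra hardening, not part of the original example, and the key shown is a dummy.

```shell
#!/bin/sh
# Sketch: build an authorized_keys entry that only permits forwarding to one
# loopback port. restrict_key is a hypothetical helper, not an OpenSSH tool.
restrict_key() {
    port="$1"      # loopback port on the proxy that may be opened
    pubkey="$2"    # public key line, e.g. the contents of endpoint.key.pub
    printf 'permitopen="127.0.0.1:%s",no-pty,no-agent-forwarding,no-X11-forwarding %s\n' \
        "$port" "$pubkey"
}

# Example with a dummy key body (not a real key)
restrict_key 10000 "ssh-ed25519 AAAAexample endpoint01"
```

The output would be stored as the endpoint’s file under the key tree configured by AuthorizedKeysFile.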

Some additional parameters suggested in the above configuration are relatively obvious: no passwords, no root access, no protocol downgrades and limits on login attempts to mitigate denial-of-service attacks. A separate process ID file should be used in case you’re running more than one sshd process on the same system (useful for monitoring, starting/stopping the service, etc).
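The account and key layout described in this section could be provisioned along the following lines. This is a sketch under assumptions: the helper names `run` and `provision_endpoint`, the account name and the key file path are all hypothetical, and the script defaults to a dry run that only prints the commands it would execute.

```shell
#!/bin/sh
# Sketch: provision one endpoint account on the proxy.
# Dry run by default: commands are printed, not executed. Set DRY_RUN=0 and
# run as root to apply. Account name and key file below are placeholders.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

provision_endpoint() {
    user="$1"       # account name for the endpoint
    keyfile="$2"    # prepared authorized_keys file for that endpoint

    run groupadd -f endpoints
    # No usable shell; useradd leaves the password disabled unless -p is given
    run useradd -M -g endpoints -d "/home/$user" -s /bin/false "$user"
    # ChrootDirectory needs a root-owned home that nobody else can write to
    run install -d -o root -g root -m 755 "/home/$user"
    # Keys live outside the chroot, matching /home/keys/%u/authorized_keys
    run install -d -o root -g root -m 755 "/home/keys/$user"
    run install -o root -g root -m 644 "$keyfile" "/home/keys/$user/authorized_keys"
}

provision_endpoint endpoint01 ./endpoint01.authorized_keys
```

Because each endpoint gets its own account and key file, cutting off a compromised endpoint comes down to removing that one account and its key directory.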

Expectations

All the testing was done with OpenSSH 6.6.1 bundled with Ubuntu 14.04. Naturally, the performance will not be as good as it could be… Not only will you get significant latency establishing a connection (even if you disable DNS lookups) but you’re also going to encapsulate TCP inside TCP… Remember that TCP already gives you bad performance when you need to connect to a remote host with high latency or even minimal packet loss. But it works. You can have good performance on a remote shell. For a VNC session, if you have enough bandwidth for good VNC performance, the performance should also be good enough with SSH.

Even if the system may be conceptually secure, you should never rule out the possibility of a zero-day vulnerability. No software is flawless and you need a mitigation strategy for a zero-day attack. Here the strategy is simple and obvious: just shut down the proxy. The proxy is a discardable asset. Kill the sshd process, kill the VM or kill whatever system is running your ssh proxy. Even if you lose access to the system running ssh, you are still able to defend the system by changing the DNS record of the proxy to 127.0.0.1. This will buy you time to act. If a vulnerability is found on the proxy, there’s also the probability of the remote endpoints having the same vulnerability. In some situations, you may not be able to do any periodic maintenance on the remote endpoints, leaving them at the mercy of the security of the proxy. It may be good practice to maintain multiple generations of the proxy or even multiple implementations of sshd for backup. Fortunately, this is very easy to do, thanks to virtualisation.

Vulnerabilities are not limited to the sshd program running on the proxy system. According to the book SSH The Definitive Guide, the SSH protocol allows the connection to be reversed; however, in OpenSSH, this is not allowed by the ssh implementation. It’s hardcoded into the program, so there’s another opportunity for an exploit. This is relevant because it would break the security model. If some tweaked version of sshd could establish a connection or even set up some session on the remote endpoint, since it has a valid authentication and a valid connection from the endpoint, we would lose the ability to limit the remote access to owners of the gold key in the event of an attacker being able to penetrate the proxy. The reason we are doing port forwarding and limiting the direction of the connection is to protect ourselves from a potentially hostile environment where a VPN-based connection, with layer 3 routing, could leave our system exposed to whatever threat may linger on the remote system or network.

Special care must be given to privilege escalation at the remote endpoint. Again, since you do not have physical control of the device, always assume the system is compromised when you connect to it. Use sudo or any similar process to avoid exposing secrets to the remote system. It doesn’t matter if it’s your grandmother’s laptop or some customer server locked in some datacenter. If you do not control the physical access to the device, you should regard it as being insecure.

Two things were omitted from the article that will also require special attention: file transfer and key management. In the suggested configuration, the endpoint is allowed to transfer files to the proxy. This can be useful to collect low frequency non-critical data. For example, if you’re running a service desk, having access to the remote network map can be useful when your user claims he or she cannot connect to the printer or some other system… But you’ll also need to ensure that an attacker is not allowed to flood the proxy exhausting the available storage. This protection can be easily implemented with LVM or user quotas. It can also be a good heuristic to alarm you that there’s something wrong when the maximum storage is reached. Another critical aspect, but out of scope for this article, is key generation, distribution and subsequent management.
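The alarm heuristic mentioned above can be approximated with a small periodic check. The sketch below is a complement to LVM or quota limits, not a replacement for them; `check_usage` is a hypothetical helper, and the directory and threshold are placeholders.

```shell
#!/bin/sh
# Sketch: warn when an upload area on the proxy grows past a limit.
# check_usage is a hypothetical helper; paths and limits are placeholders.
check_usage() {
    dir="$1"         # directory to measure, e.g. an endpoint's chroot
    limit_kb="$2"    # alert threshold in kilobytes
    used_kb=$(du -sk "$dir" | awk '{print $1}')
    if [ "$used_kb" -ge "$limit_kb" ]; then
        echo "ALERT: $dir uses ${used_kb} KB (limit ${limit_kb} KB)"
        return 1
    fi
    echo "OK: $dir uses ${used_kb} KB (limit ${limit_kb} KB)"
}

# Example run against a scratch directory with a generous limit
SCRATCH="$(mktemp -d)"
check_usage "$SCRATCH" 1048576
```

Run from cron against each endpoint’s directory, the non-zero return status on an alert could be used to trigger a notification.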

No doubt SSH is a remarkable tool. I cannot ensure that SSH is the best tool for this challenge of having a secure access to an insecure device in an unknown location but at least, I hope to have triggered your interest in this problem.